My Notes on AI on the Lot 2025 Part 1

Day 1 Opening Remarks:

There were some technical difficulties at first, but I do remember a quote about an axe that I had to look up later:

“Give me six hours to chop down a tree and I will spend the first four sharpening the axe.” ― Abraham Lincoln

Speakers: Conference Director and AILA Executive Director Todd Terrazas, then Culver City Mayor Dan O'Brien.

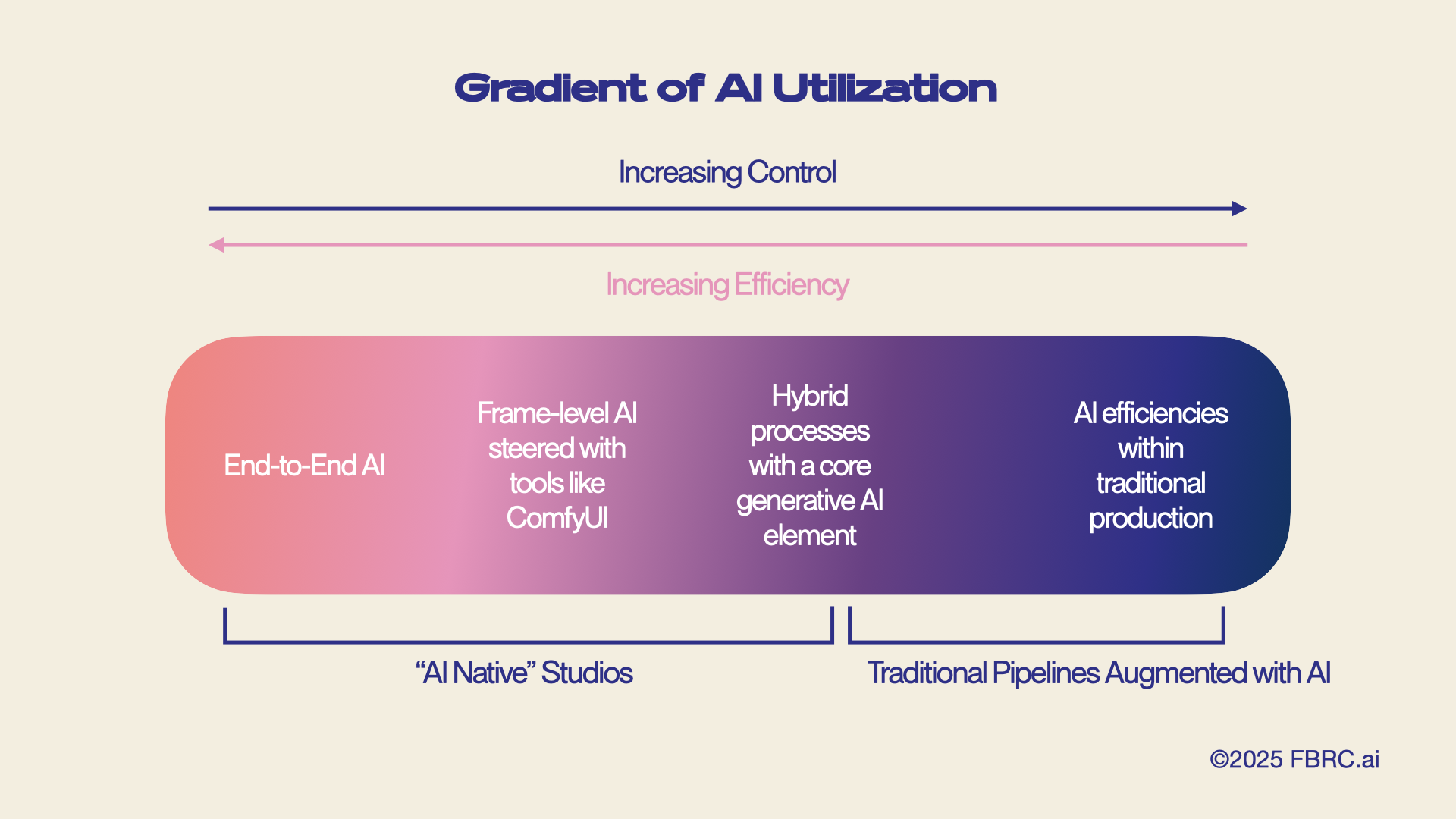

Todd talked about how media consumption has changed. Traditional filmmaking is on one end of the spectrum, and “AI native” is on the other.

You kind of already heard this with AWS: you have traditional data centers versus cloud computing. You could run your business in your own data center, or you can run it in the cloud, or take a “hybrid” approach.

Hybrid approaches are usually the way people go. It is becoming increasingly common to do some traditional filmmaking while using AI.

Plus, unless people build their own data centers locally, AI runs in a “cloud” that we do not control. It is controlled by OpenAI, Amazon, or Google.

There was one slide from FBRC.ai I thought was good because it reminded me of my AWS trainer days. I saw it once, then I saw more speakers use it in their presentations, and I later found it on IndieWire.

Todd says AI LA seeks to help creators collaborate and to do what it can to revitalize the entertainment industry in California. He cited Ben Affleck at DealBook, who basically talked about how creator-led studios could change Hollywood.

Last year the conference was a day. This year it was two and a half days, and included Labs to work with AI artists and generative AI. “Gen Jam” is a chance for attendees to bring their laptops and participate in a workshop, and get hands-on with AI in collaboration with artists. It reminded me of Per Scholas, because you have lectures, then you have a hands-on lab. I had ideas about how cool that sort of conference could be.

Dan, the mayor of Culver City, used to work as an editor. At 54 years old, he said even he was embracing the change. He mentioned how SXSW started small, and now it’s big. Culver City has Asian film festivals, African festivals, and now an AI filmmaking community, and he hopes there will be more growth and revitalization in Culver City.

Keynote: State of NVIDIA AI in M&E

Speaker: Rick Champagne, Director of Media, NVIDIA (NVIDIA author blog)

Rick has a really long work history. He says to understand Artificial Intelligence, first we need to understand NVIDIA’s role in AI.

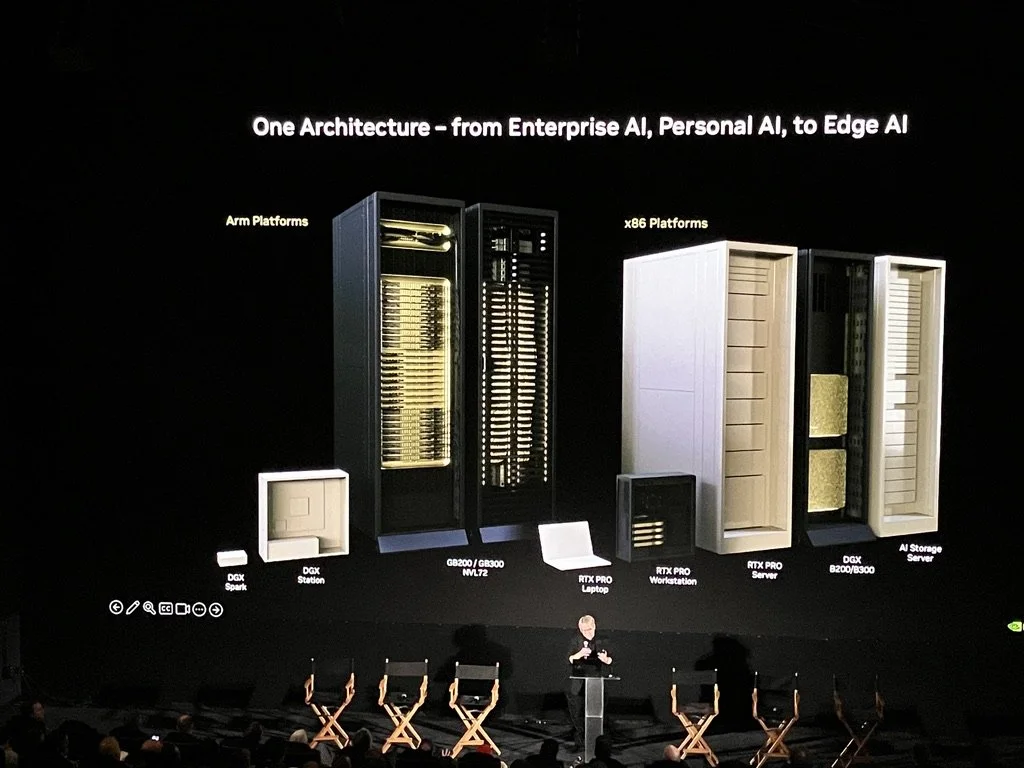

The RTX PRO: Blackwell architecture, powerful GPU. GDDR7 memory. New streaming multiprocessor. Lightning-fast video processing.

These look so expensive. He showed a video. I recorded some of it on my phone. Basically, more geometry, better visuals, better image quality.

Then Rick gave a list of categories for all these different types of artificial intelligence:

In 2012 there was AlexNet

perception AI (speech recognition, medical imaging)

generative AI (digital marketing, content creation)

agentic AI (coding assistant, customer service, patient care)

physical AI (autonomous vehicle, robotics)

AI agents built with multiple apps can solve complex problems. Developers are at the forefront of building AI, from data prep to training and so on.

It reminded me of a quote from Brad Stone’s The Everything Store, where he quoted Jeff Bezos talking about AWS: “Developers are alchemists and our job is to do everything we can to let them do their alchemy.”

NVIDIA went from a GPU company to an AI infrastructure company. AI data centers could be operated from a “single pane of glass.” Nice to hear from an IT management perspective.

NVIDIA optimized the whole stack, trying to take ten server racks and optimize down to one rack. They are preparing for software developers, network engineers, interconnects between GPUs and switches, sustainable electricity planning, trying to use less storage, anything they can optimize for NVIDIA partners like Dell.

Sovereign AI - Data sovereignty is relevant to everyone. The paradigms and best practices that Rick’s guys are working on will be shared with producers/consumers like us once they are ready.

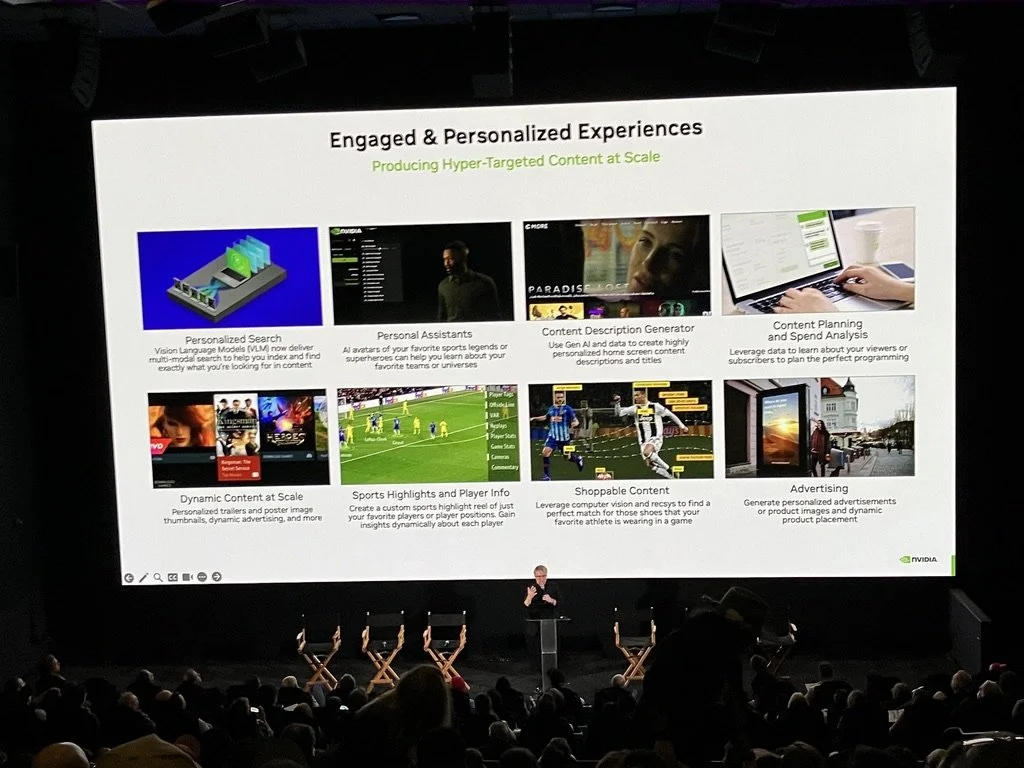

So back to Media & Entertainment - Rick noticed people care about content creation tools a lot at conferences, but there’s something else that’s interesting: 80% of what we discover to watch next is from AI recommendations.

Evan Shapiro has written about the user-centric era of media for a while, and Rick seems to agree.

AI will help us out with personalized search, personal assistant, content description generator, sports highlights, athlete info finder, shoppable content to buy that athlete’s shoe, personalized ads, etc.

Not everyone sees the game in a stadium. Many will engage with the game at home. A kid in Finland might just see highlights. A right winger just wants to see right wing plays.

Rick pointed to these as responses to this consumer demand:

Free ad-supported streaming television (FAST)

NVIDIA Media2 tech stack, the building blocks of AI-enabled media companies

Holoscan for Media, an AI platform for live media

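Retrieval Augmented Generation can help: if you are on season 2, the model can look up material from season 1 and use it as reference while helping on season 2. Strictly speaking that is retrieval at prompt time rather than training the model.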
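To make the retrieval idea concrete for myself, here is a minimal Python sketch. It is not anything Rick showed or anything from NVIDIA's stack. The season 1 notes, the function names, and the simple word-overlap scoring are all my own assumptions for illustration; a real system would typically use embeddings and a vector database, then send the prompt to an actual model.

```python
import re
from collections import Counter

# Hypothetical season 1 notes, made up for this example.
SEASON_ONE_NOTES = [
    "Episode 1: The hero leaves the village after the flood.",
    "Episode 2: The hero meets a smuggler who owns a red boat.",
    "Episode 3: The smuggler betrays the hero at the harbor.",
]


def tokenize(text):
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents that share the most words with the question."""
    question_words = tokenize(question)
    scored = [
        (sum((question_words & tokenize(doc)).values()), doc)
        for doc in documents
    ]
    # Highest overlap first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, documents):
    """Put the retrieved notes in front of the task before it goes to a model."""
    context = "\n".join(retrieve(question, documents))
    return f"Use these season 1 notes as reference:\n{context}\n\nTask: {question}"


if __name__ == "__main__":
    print(build_prompt("What color is the smuggler's boat?", SEASON_ONE_NOTES))
```

The point of the sketch is that the model itself is not retrained. The season 1 material is looked up and pasted into the prompt each time a season 2 question comes in.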

On House of David, the staff increased as AI was being used. AI is adding new departments, not cutting departments.

The Takeaways from Rick’s talk:

Keep experimenting and get started fast. The Act 2 plot twist of the AI hype train is coming in 2025: AI is not the main character, but the storyteller is.

Cloud-Connected Workflows to Empower Storytellers with Gen AI

As someone who taught multiple AWS re/Start classes at Per Scholas (here is a graduation day LinkedIn post), I already had an idea of how powerful cloud-based media workflows could be before going into a panel on cloud-connected workflows with four Amazon executives.

Speakers:

Danae Kokenos, Head of Technology Innovation, Amazon MGM Studios

Gerard Medioni, VP & Distinguished Scientist, Amazon MGM Studios

Chris Del Conte, Director of VFX, Amazon MGM Studios

Rachel Kelley, Principal, Customer Solutions, Amazon Web Services (AWS)

The execs talked about moving away from “prompt and pray” and instead, building tools for creators.

GenAI can be additive at the pitch stage and the development stage.

You can do more than have a screenplay and passion. You can create actual images from your words by iterating.

You do have to keep iterating, and put your work in a place it can be seen and commented on.

New job title, says Gerard, the scientist at MGM / Prime Video: prompt artist. People who can talk to the tool well are like dragon riders.

Cloud infrastructure is a game changer for editing. Traditionally content is created, then sent to an editor, who sends it back, and so on.

With cloud, you can do editing worldwide. A whole team can work on one movie at the same time around the world. Information transfer is so cheap.

Basically, the picture in that slide about AI utilization is very similar to cloud computing: there’s traditional filmmaking, then there’s cloud-based AI filmmaking, and the rest is a spectrum of hybrid.

Going from text to image to video, that traditional workflow could change. Artists can now just start prompting the AI to use different lenses, and lighting, and that is empowering.

My Takeaway From This Talk:

There is no “right way” to use AI, only “effective” or “ineffective” uses.

Testing Out OpenAI’s Sora:

I decided to try using OpenAI’s Sora in the lab area of the conference to get hands-on. First I used ChatGPT and told it about me and what I do at Per Scholas. Then I asked it for prompts and used this one.

My prompt was work-related:

“a young Black male IT student with dreadlocks sits in a college computer lab. He narrates a tutorial on detecting and removing malware using Windows Defender. He opens Task Manager to show suspicious processes, scans with Defender, and quarantines the malware. The camera cuts between a close-up of his focused face and a clear screen recording. Add tech-themed background music and a bold color palette for visual engagement.”

I uploaded it to YouTube Shorts: https://youtube.com/shorts/aYdr-lbpTG4

That’s all for now! Once I get through the rest of my notes I’ll put up another blog about AI on the Lot 2025.

Maybe I’ll go back through my notes from 2024 as well. Cannot wait to see what new AI is coming in 2026!